Whilst writing the book I encountered several functions that compute a CI for Pearson's r using the Fisher z transformation. A number of them use the usual textbook formula, and the cor.test() function in base R (package stats) does this for raw data (along with computing the correlation and the usual NHST). My function relies on R's primitive hyperbolic functions (the Fisher z transformation is related to the geometry of hyperbolas), which may be useful if you need to use it intensively (e.g., for simulations).

The steps for calculating the sample size for a Pearson's r correlation in GPower are:

1. Select t tests from Test family.
2. Select Means: difference from constant (one sample case) from Statistical test.
3. Select A priori from Type of power analysis.

Background info:

a) Select One or Two from Tail(s), depending on the type of hypothesis.

b) Enter 0.

The effect size is the hypothesized coefficient of determination.
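For a rough cross-check on the sample size GPower reports, here is a minimal Python sketch using the common Fisher z approximation for testing H0: ρ = 0, n = ((z_α + z_β) / atanh(ρ))² + 3. This is a swapped-in approximation, not GPower's own routine (GPower can use the exact distribution), so its answer may differ by a case or so. The function name `n_for_correlation` and its defaults (α = .05, power = .80) are hypothetical; the `two_tailed` flag mirrors the One/Two choice under Tail(s).

```python
from math import atanh, ceil
from statistics import NormalDist


def n_for_correlation(rho, alpha=0.05, power=0.80, two_tailed=True):
    """Approximate n needed to detect a population correlation rho (H0: rho = 0).

    Hypothetical helper using the Fisher z approximation
    n = ((z_alpha + z_beta) / atanh(rho))**2 + 3,
    not the exact distribution GPower can use.
    """
    if not 0 < abs(rho) < 1:
        raise ValueError("rho must be non-zero and strictly between -1 and 1")
    std_normal = NormalDist()  # mean 0, sd 1
    # Split alpha across both tails for a two-tailed test
    tail_alpha = alpha / 2 if two_tailed else alpha
    z_alpha = std_normal.inv_cdf(1 - tail_alpha)
    z_beta = std_normal.inv_cdf(power)
    n = ((z_alpha + z_beta) / atanh(abs(rho))) ** 2 + 3
    return ceil(n)  # round up to a whole number of cases


print(n_for_correlation(0.3))  # → 85
```

A one-tailed test (`two_tailed=False`) needs fewer cases, and larger correlations need fewer cases than smaller ones.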

# GPower Pearson correlation, dependent r: how to

Section 6.7.5 shows how to write a simple R function and illustrates it with a function to calculate a CI for Pearson’s r using the Fisher z transformation.
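As an illustration of the same idea (not the book's R function), here is a minimal Python sketch of a Fisher z CI for a single correlation: transform r with atanh, build a symmetric interval with standard error 1/√(n − 3), then back-transform the limits with tanh. The name `r_ci` and its defaults are hypothetical.

```python
from math import atanh, sqrt, tanh
from statistics import NormalDist
from typing import Tuple


def r_ci(r: float, n: int, conf: float = 0.95) -> Tuple[float, float]:
    """Hypothetical helper: CI for Pearson's r via the Fisher z transformation."""
    if not -1 < r < 1:
        raise ValueError("r must lie strictly between -1 and 1")
    if n <= 3:
        raise ValueError("n must be greater than 3")
    z_r = atanh(r)        # Fisher z: 0.5 * ln((1 + r) / (1 - r))
    se = 1 / sqrt(n - 3)  # approximate standard error of z_r
    crit = NormalDist().inv_cdf(1 - (1 - conf) / 2)
    # Symmetric interval on the z scale, then back-transform to the r scale
    return tanh(z_r - crit * se), tanh(z_r + crit * se)


lower, upper = r_ci(0.5, 30)
print(f"95% CI for r = .50, n = 30: [{lower:.3f}, {upper:.3f}]")
```

The back-transformed interval is asymmetric around r (it stretches further towards 0), which is how the transformation handles the boundary at ±1. As far as I know, R's cor.test() uses the same transformation for its Pearson CI, so the two should agree for the same r and n.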

# GPower Pearson correlation, dependent r: R code

224) I illustrate Zou’s approach for independent correlations and provide R code in sections 6.7.5 and 6.7.6 to automate the calculations. He also considered differences in R 2 (not relevant here).

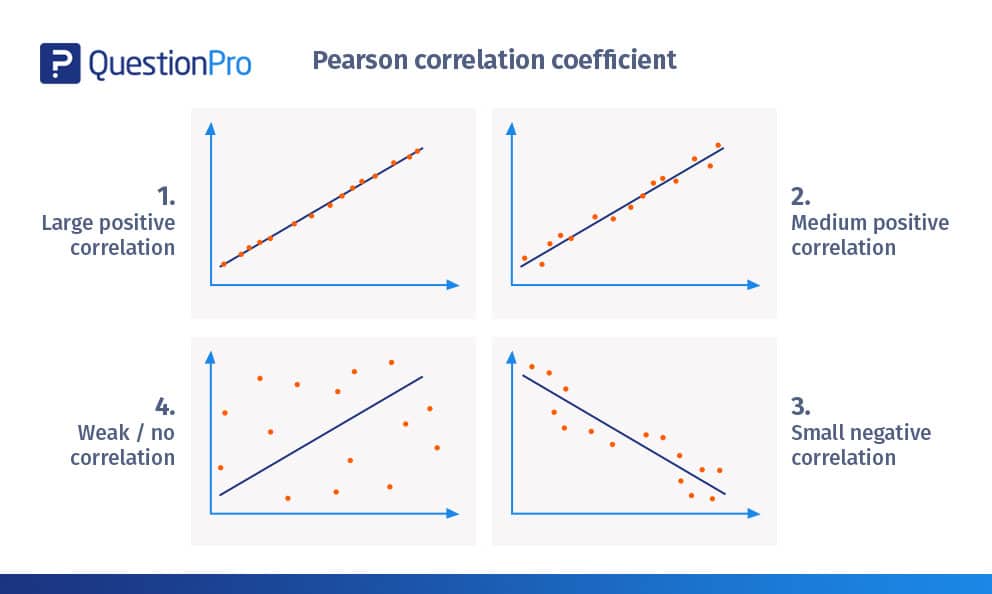

He considered three cases: independent correlations and two types of dependent correlations (overlapping and non-overlapping). Zou (2007) proposed modification to the standard approach that uses the upper and lower bounds of the CIs for individual correlations to calculate a CI for their difference. Knowing r and n (the sample size), we can infer whether is significantly different from 0. It is an estimate of rho ( ), the Pearson correlation of the population. This works well for the CI around a single correlation (assuming the main assumptions – bivariate normality and homogeneity of variance – broadly hold) or for differences between means, but can perform badly when looking at the difference between two correlations. The Pearson correlation of the sample is r. Correlation is simply normalized covariation, and covariation measures how 2 random variables co-variate, that is, how change in one. Pearson, on other hand, defines correlation. As z r is approximately normally distributed (which r is decidedly not) you can create a standard error for the difference by summing the sampling variances according to the variance sum law (see chapter 3). Logistic regression works with both - continuous variables and categorical (encoded as dummy variables), so you can directly run logistic regression on your dataset. Both procedures are Correlations: Two independent Pearson rs (two sam- ples) 9 3.5 Implementation notes Exact distribution. The standard approach uses the Fisher z transformation to deal with boundary effects (the squashing of the distribution and increasing asymmetry as r approaches -1 or 1). In Chapter 6 (correlation and covariance) I consider how to construct a confidence interval (CI) for the difference between two independent correlations.
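To make the contrast between the two approaches concrete, here is a minimal Python sketch for two independent correlations. `z_diff_ci` applies the variance sum law on the Fisher z scale; `zou_diff_ci` follows my reading of Zou's (2007) method for independent correlations, combining each correlation's own CI limits (l, u) as L = r1 − r2 − √((r1 − l1)² + (u2 − r2)²) and U = r1 − r2 + √((u1 − r1)² + (r2 − l2)²). All function names are hypothetical, and the formulas should be checked against the paper before serious use.

```python
from math import atanh, sqrt, tanh
from statistics import NormalDist
from typing import Tuple


def fisher_ci(r: float, n: int, conf: float = 0.95) -> Tuple[float, float]:
    """Hypothetical helper: CI for a single Pearson r via Fisher z."""
    crit = NormalDist().inv_cdf(1 - (1 - conf) / 2)
    z_r, se = atanh(r), 1 / sqrt(n - 3)
    return tanh(z_r - crit * se), tanh(z_r + crit * se)


def z_diff_ci(r1, n1, r2, n2, conf=0.95):
    """Standard approach: CI for z_r1 - z_r2 using the variance sum law.

    The interval is on the Fisher z scale; applying tanh() to its
    limits does NOT give a CI for r1 - r2.
    """
    crit = NormalDist().inv_cdf(1 - (1 - conf) / 2)
    se_diff = sqrt(1 / (n1 - 3) + 1 / (n2 - 3))  # sum of sampling variances
    d = atanh(r1) - atanh(r2)
    return d - crit * se_diff, d + crit * se_diff


def zou_diff_ci(r1, n1, r2, n2, conf=0.95):
    """Sketch of Zou's (2007) CI for r1 - r2 from independent samples."""
    l1, u1 = fisher_ci(r1, n1, conf)
    l2, u2 = fisher_ci(r2, n2, conf)
    d = r1 - r2
    # Lower limit: distance from r1 down to l1 combined with r2 up to u2
    lower = d - sqrt((r1 - l1) ** 2 + (u2 - r2) ** 2)
    # Upper limit: distance from r1 up to u1 combined with r2 down to l2
    upper = d + sqrt((u1 - r1) ** 2 + (r2 - l2) ** 2)
    return lower, upper


print(zou_diff_ci(0.5, 100, 0.3, 100))
```

Because Zou's limits are built from the asymmetric single-correlation CIs, the interval for r1 − r2 inherits their asymmetry and stays on the correlation scale, which the z-scale interval cannot do directly.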
